Verification, validation, and calibration through causal inference

While typical validation and verification approaches focus on identifying associations between data elements using statistical and machine learning methods, the novel methods in this paper focus instead on identifying causal relationships between data elements. Statistical and machine-learning-based approaches are strictly data-driven: they provide quantitative measures for comparing data sets without explicitly considering the hypotheses behind them. This can lead to the erroneous conclusion that, if two data sets are close enough, the models that generated them are similar. In addition, when experimental and simulated data differ to an extent that fails to meet the acceptance criteria, calibration techniques are used to tweak simulation model parameters to reduce the gap between the two types of data. This can create the false expectation that the calibrated simulation model matches reality, even when the tuned parameters do not reflect the mechanisms of the real system.

The methods presented in this paper move away from these strictly data-driven methods for validation and calibration toward more robust, model-driven methods based on causal inference. Causal inference aims to identify the possible mechanisms that might have generated the data. The analysis therefore targets predicting the effects of altering one or more of the identified mechanisms. There are many approaches to identifying, quantifying, and illustrating causal relationships.
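The pitfall of judging models by the closeness of their data can be reproduced with a toy example. The following Python sketch is an illustrative construction, not one of the paper's methods: two hypothetical linear-Gaussian models, `model_a` (X → Y) and `model_b` (Y → X), are parameterized so that their observational data have the same means, variances, and correlation. A purely data-driven comparison of their samples cannot tell them apart, yet an intervention that fixes X at 2, written do(X = 2), shifts the mean of Y only in the model where X causes Y.

```python
import random
import statistics

# Two hypothetical linear-Gaussian models (a toy construction, not the
# paper's models). Coefficients are chosen so that X and Y both have mean 0,
# variance 1 (0.8**2 + 0.6**2 = 1), and correlation 0.8 in either model.

def model_a(n, rng, do_x=None):
    """X -> Y. If do_x is given, X's equation is replaced by that constant."""
    data = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0) if do_x is None else do_x
        y = 0.8 * x + rng.gauss(0.0, 0.6)
        data.append((x, y))
    return data

def model_b(n, rng, do_x=None):
    """Y -> X. If do_x is given, X's equation is replaced by that constant."""
    data = []
    for _ in range(n):
        y = rng.gauss(0.0, 1.0)
        x = (0.8 * y + rng.gauss(0.0, 0.6)) if do_x is None else do_x
        data.append((x, y))
    return data

def summary(data):
    """Purely data-driven comparison: means, variances, and correlation."""
    xs = [x for x, _ in data]
    ys = [y for _, y in data]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    vx = statistics.pvariance(xs, mx)
    vy = statistics.pvariance(ys, my)
    cov = sum((x - mx) * (y - my) for x, y in data) / len(data)
    return {"mean_x": mx, "mean_y": my, "var_x": vx, "var_y": vy,
            "corr": cov / (vx * vy) ** 0.5}

rng = random.Random(0)
n = 50_000
obs_a = summary(model_a(n, rng))
obs_b = summary(model_b(n, rng))
# Observational summaries agree up to sampling noise (corr ≈ 0.8 in both).

# Intervention do(X = 2): only model A propagates the change to Y.
mean_y_a = statistics.fmean([y for _, y in model_a(n, rng, do_x=2.0)])  # ≈ 1.6
mean_y_b = statistics.fmean([y for _, y in model_b(n, rng, do_x=2.0)])  # ≈ 0.0
```

The intervention is implemented by replacing X's equation with a constant, which is the standard reading of the do-operator; the model names, coefficients, and sample size are assumptions chosen only for this illustration.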

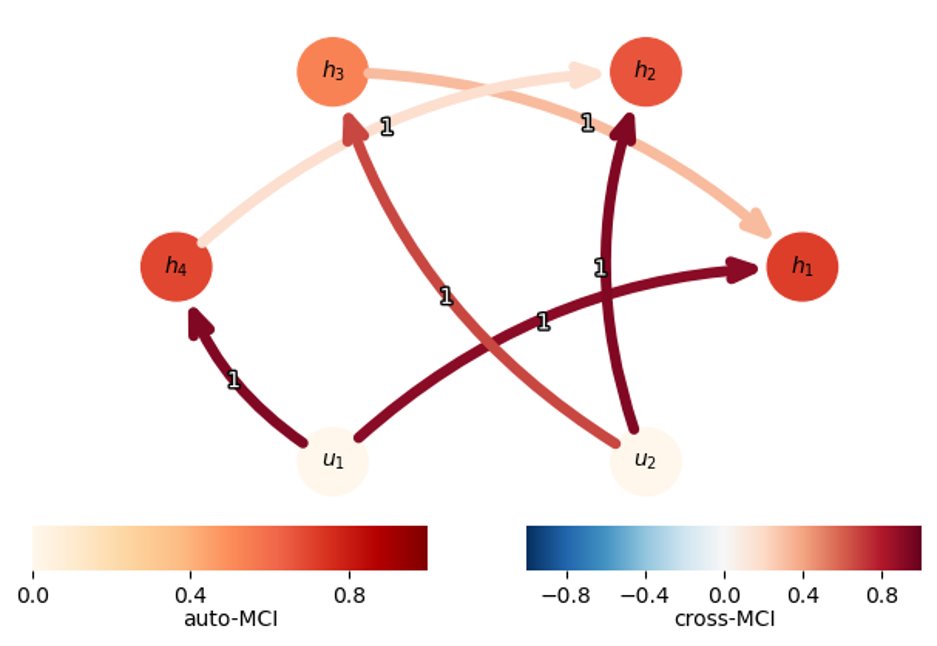

Directed graphs are used as causal models. A directed graph without cycles is called a directed acyclic graph (DAG). A node in such a graph represents an observed data element, while a directed edge connecting two nodes represents a causal relationship between the two corresponding variables, pointing from cause to effect. The developed causal methods are designed to extract causal models from both simulation models and experimental data.
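As a minimal sketch of the simulation-model side of this extraction, assume a hypothetical simulation model written as one structural equation per variable (the names `heater_power`, `temperature`, and `pressure`, the equations, and the helper functions are all invented for illustration). The sketch adds an edge X → Y whenever perturbing X alone changes the output of Y's equation, then uses Kahn's topological-sort algorithm to check that the resulting directed graph is acyclic. This is a simple perturbation-based stand-in, not necessarily the procedure used in the paper, and it does not cover learning causal models from experimental data.

```python
from collections import deque

# Hypothetical simulation model: one structural equation per variable.
# Each equation reads the values it needs from a dict of current values.
EQUATIONS = {
    "heater_power": lambda v: 50.0,
    "temperature": lambda v: 20.0 + 0.4 * v["heater_power"],
    "pressure": lambda v: 0.01 * v["temperature"] + 1.0,
}

def extract_edges(equations, base_value=1.0, delta=1.0):
    """Add edge X -> Y when perturbing X alone changes Y's equation output,
    with every other input held at base_value (finite-difference test)."""
    names = list(equations)
    edges = []
    for y in names:
        for x in names:
            if x == y:
                continue
            base = {name: base_value for name in names}
            perturbed = dict(base)
            perturbed[x] = base_value + delta
            if equations[y](base) != equations[y](perturbed):
                edges.append((x, y))
    return edges

def topological_order(nodes, edges):
    """Kahn's algorithm: return a topological order of the nodes,
    or None if the graph contains a cycle (i.e., it is not a DAG)."""
    indegree = {n: 0 for n in nodes}
    children = {n: [] for n in nodes}
    for cause, effect in edges:
        children[cause].append(effect)
        indegree[effect] += 1
    queue = deque(n for n in nodes if indegree[n] == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)
    return order if len(order) == len(nodes) else None

edges = extract_edges(EQUATIONS)
print(edges)  # [('heater_power', 'temperature'), ('temperature', 'pressure')]
print(topological_order(list(EQUATIONS), edges))
# ['heater_power', 'temperature', 'pressure']
print(topological_order(list(EQUATIONS), edges + [("pressure", "heater_power")]))
# None
```

Because each equation is probed with all other inputs held fixed, the test finds direct dependencies (edges) rather than downstream effects. A dependency that happens to vanish at the probed point would be missed, so a practical version would probe several points.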

Causal models capture the causal relationships between data elements (e.g., in simulated and experimental data). In this context, validation and verification are performed by comparing causal models. The proposed approach not only informs system analysts about how well a simulation model matches real-world data but also identifies the elements of the simulation model that should be revised when discrepancies between simulated and experimental data are observed. Through these causal methods, analysts can identify the portion of the model equation(s) responsible for an edge connecting two variables. Hence, once the structural differences between causal models have been determined, calibration can be restricted to the model parameters that affect the identified causal relationships.
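The comparison and targeted-calibration steps can be sketched in Python as follows. Everything here is a hypothetical illustration: the two edge sets stand in for causal models assumed to have been extracted from a simulation model and from experimental data, and `EDGE_PARAMETERS` is an assumed mapping from each simulation edge to the parameters of the equation term behind it. The sketch classifies edges as shared, missing, extra, or reversed and returns only the parameters tied to disagreeing simulation edges.

```python
# Hypothetical causal models over the same variables (illustrative only).
SIM_EDGES = {("heater_power", "temperature"), ("temperature", "pressure"),
             ("heater_power", "pressure")}
EXP_EDGES = {("heater_power", "temperature"), ("temperature", "pressure"),
             ("ambient_temp", "temperature")}

# Hypothetical map from each simulation edge to the parameters of the
# equation term that produces it.
EDGE_PARAMETERS = {
    ("heater_power", "temperature"): {"k_heat"},
    ("temperature", "pressure"): {"k_gas"},
    ("heater_power", "pressure"): {"k_direct"},
}

def compare_causal_models(sim_edges, exp_edges):
    """Classify edges as shared, missing from the simulation, extra in the
    simulation, or present in both models with opposite directions."""
    sim, exp = set(sim_edges), set(exp_edges)
    reversed_in_sim = {(a, b) for (a, b) in sim - exp if (b, a) in exp}
    return {
        "shared": sim & exp,
        "missing_in_sim": {(a, b) for (a, b) in exp - sim if (b, a) not in sim},
        "extra_in_sim": (sim - exp) - reversed_in_sim,
        "reversed_in_sim": reversed_in_sim,
    }

def parameters_to_calibrate(diff, edge_parameters):
    """Collect only the parameters behind simulation edges that disagree
    with the experimental causal model. Missing edges have no simulation
    parameters; they point to equations that need structural revision."""
    params = set()
    for edge in diff["extra_in_sim"] | diff["reversed_in_sim"]:
        params |= edge_parameters.get(edge, set())
    return params

diff = compare_causal_models(SIM_EDGES, EXP_EDGES)
print(sorted(diff["missing_in_sim"]))  # [('ambient_temp', 'temperature')]
print(sorted(diff["extra_in_sim"]))    # [('heater_power', 'pressure')]
print(sorted(parameters_to_calibrate(diff, EDGE_PARAMETERS)))  # ['k_direct']
```

In this example only `k_direct` would be re-tuned; `k_heat` and `k_gas` sit behind edges that both models agree on and are left alone. The missing edge from `ambient_temp` has no counterpart in the simulation, which signals a revision of the temperature equation rather than a calibration.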

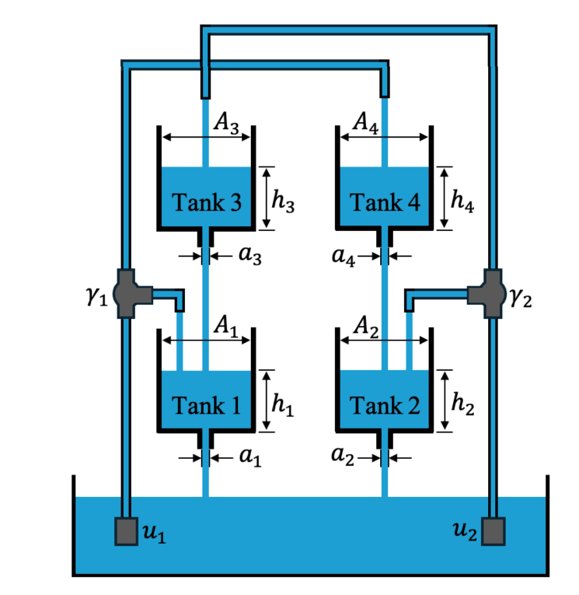

Original system

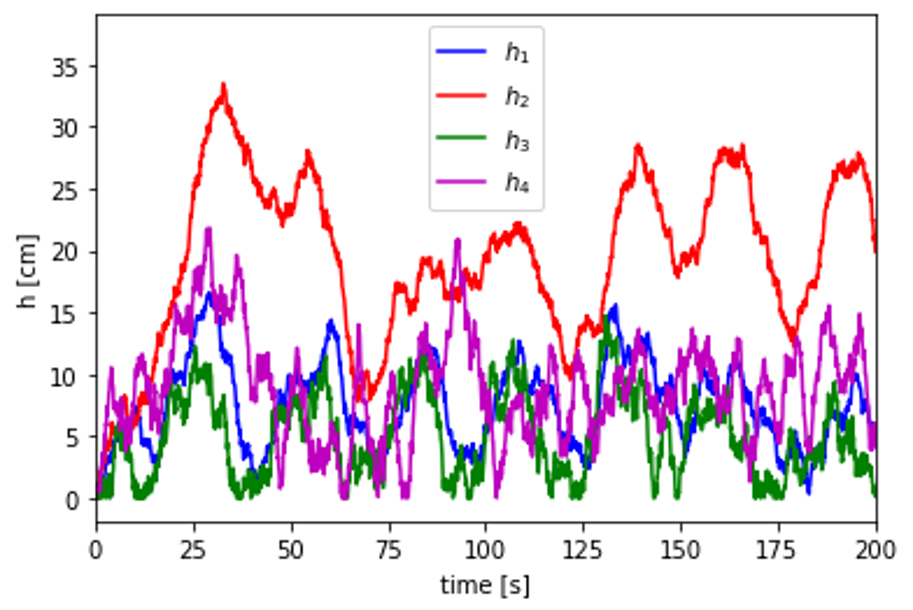

Observed dynamics

Structural causal model